In part 1 of this series I introduced a script consisting of over 900 lines of PowerShell (although over 20% of that is comments) that ultimately just calls a single Windows API, namely SetProcessWorkingSetSizeEx, in order to make more memory available on a Windows computer by reducing the working set sizes of targeted processes. This is known as memory trimming, but I’ve always taken issue with the term: the dictionary definition of trimming is to remove a small part of something, whereas default memory trimming, to use a hair cutting analogy, is akin to scalping the victim.

This “scalping” of working sets can be counterproductive. Although more memory becomes available for other processes/users, the scalped processes quickly need some of that memory back, and by then it may have been paged out. Mapping the trimmed memory back into the processes can cause excessive hard page faults and thus performance degradation, despite there being more memory available.

So how do we address this such that we actually do trim excessive memory from processes but leave sufficient for them to continue operating without needing to retrieve that trimmed memory? Well, unfortunately it is not an exact science, but there are options to the script which can help prevent the negative effects of over trimming. These are in addition to the inactivity points mentioned in part 1, namely idle, disconnected or when the screen is locked, where the user’s processes are unlikely to be active so hopefully shouldn’t miss any of their memory.

Firstly, there is the parameter -above which will only trim processes whose working set exceeds the value given. The script has a default of 10MB for this value as my experience points to this being a sensible value below which there is no benefit to trimming. Feel free to play around with this figure although not on a production system.
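To get a feel for which processes a given -above value would catch, a filter along these lines can help. It is only a sketch of the idea, not the script’s own code, and the function name Select-ProcessAboveWorkingSet is made up for illustration.

```powershell
# Hypothetical helper, not taken from the script: pass through only those
# processes whose working set is larger than the threshold.
# The 10MB default mirrors the script's default for -above.
function Select-ProcessAboveWorkingSet {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [object]$Process,
        [long]$Above = 10MB
    )
    process {
        if ($Process.WorkingSet64 -gt $Above) { $Process }
    }
}

# List the candidates, biggest first, with the working set shown in MB.
Get-Process | Select-ProcessAboveWorkingSet -Above 10MB |
    Sort-Object WorkingSet64 -Descending |
    Select-Object Name, Id, @{ n = 'WorkingSetMB'; e = { [math]::Round($_.WorkingSet64 / 1MB, 1) } }
```

Running this before and after changing the threshold shows how many processes drop out of scope, without trimming anything.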

Secondly, there is the -available parameter which will only trim processes when the available memory is below the given figure, which can be an absolute value such as 500MB or a percentage such as 10%. The available memory figure is the ‘Available MBytes’ performance counter in the ‘Memory’ category. Depending on why you are trimming, this option can be used to only trim when available memory is relatively low although not so low that Windows itself indiscriminately trims processes. If I were trying to increase the user density on a Citrix XenApp or RDS server then I wouldn’t use this parameter.
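The check behind a threshold like this might look roughly as follows. This is a sketch rather than the script’s actual logic: Test-AvailableMemoryBelow is a hypothetical name, and I am assuming that a percentage is taken relative to total physical memory, which the description above does not spell out.

```powershell
# Hypothetical helper: returns $true when available memory is below a
# threshold given as an absolute size ('500MB') or a percentage ('10%').
# Assumption: a percentage is relative to total physical memory.
function Test-AvailableMemoryBelow {
    param(
        [Parameter(Mandatory)][string]$Threshold,
        [Parameter(Mandatory)][long]$AvailableBytes,
        [Parameter(Mandatory)][long]$TotalBytes
    )
    $units = @{ KB = 1KB; MB = 1MB; GB = 1GB; TB = 1TB }
    if ($Threshold -match '^\s*(\d+(?:\.\d+)?)\s*%\s*$') {
        $limit = $TotalBytes * ([double]$Matches[1] / 100)
    }
    elseif ($Threshold -match '^\s*(\d+)\s*(KB|MB|GB|TB)?\s*$') {
        # No unit suffix means the figure is already in bytes.
        $unit = [string]$Matches[2]
        $multiplier = if ($unit) { $units[$unit] } else { 1 }
        $limit = [double]$Matches[1] * $multiplier
    }
    else {
        throw "Unrecognised threshold '$Threshold'"
    }
    $AvailableBytes -lt $limit
}

# Windows only: read the counter mentioned above plus the installed RAM.
if ($env:OS -eq 'Windows_NT') {
    $availableMB = (Get-Counter '\Memory\Available MBytes').CounterSamples[0].CookedValue
    $totalBytes  = (Get-CimInstance Win32_ComputerSystem).TotalPhysicalMemory
    Test-AvailableMemoryBelow -Threshold '10%' -AvailableBytes ($availableMB * 1MB) -TotalBytes $totalBytes
}
```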

Thirdly, there is a -background option which will only trim those processes of the current user that do not own the foreground window, as returned by the GetForegroundWindow API. Because it works on the current user’s processes, it can only be used in conjunction with the -thisSession argument. The theory is that processes hosting non-foreground windows are not actively being used so shouldn’t have a problem with their memory being trimmed.
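For the curious, finding the non-foreground processes in the current session can be sketched like this. GetForegroundWindow is the API named above; pairing it with GetWindowThreadProcessId to get from the window handle to a process id is my assumption about how this would be done, and Select-BackgroundProcess is a hypothetical name, so don’t treat it as the script’s implementation.

```powershell
# Hypothetical helper: processes in the given session that do not own the
# foreground window.
function Select-BackgroundProcess {
    param(
        [Parameter(Mandatory)][object[]]$Process,
        [Parameter(Mandatory)][int]$SessionId,
        [Parameter(Mandatory)][uint32]$ForegroundProcessId
    )
    $Process | Where-Object { $_.SessionId -eq $SessionId -and $_.Id -ne $ForegroundProcessId }
}

# The window APIs only exist on Windows, so guard their use.
if ($env:OS -eq 'Windows_NT') {
    Add-Type -Namespace Sketch -Name User32 -MemberDefinition @'
[DllImport("user32.dll")]
public static extern IntPtr GetForegroundWindow();

[DllImport("user32.dll")]
public static extern uint GetWindowThreadProcessId(IntPtr hWnd, out uint lpdwProcessId);
'@

    # Map the foreground window handle to the id of the process that owns it.
    $foregroundPid = [uint32]0
    $null = [Sketch.User32]::GetWindowThreadProcessId(
        [Sketch.User32]::GetForegroundWindow(), [ref]$foregroundPid)

    # Restrict to the session this PowerShell process is running in.
    $mySession = (Get-Process -Id $PID).SessionId
    Select-BackgroundProcess -Process (Get-Process) -SessionId $mySession -ForegroundProcessId $foregroundPid
}
```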

Lastly, we can utilise the working set limit feature built into Windows and accessed via the same SetProcessWorkingSetSizeEx API. Two of the parameters passed to this function are the minimum and maximum working set sizes for the process being affected. When trimming, or scalping as I tend to call it, both of these are passed as -1 which tells Windows to remove as many pages as possible from the working set. However, when they are positive integers, they set limits instead and the working set is adjusted to meet them. These limits can be soft or hard: a soft limit is effectively just applied when the API is called and can subsequently be exceeded, whereas a hard limit can never be breached. We can therefore use soft limits to reduce a working set to a given value without scalping it. Hard limits can be used to cap processes that leak memory, which will be covered in the next article, although there is a video here showing it for those who simply can’t wait.
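A minimal sketch of calling the API from PowerShell is shown below. The P/Invoke signature and the flag values follow Microsoft’s documentation for SetProcessWorkingSetSizeEx, but the helper names (Get-WorkingSetFlag, Set-WorkingSetLimit) and the 1MB minimum in the commented example are my own illustrative choices, not something lifted from the script.

```powershell
# Hypothetical helper: build the Flags argument. Values are the documented
# QUOTA_LIMITS_HARDWS_* constants; limits are soft unless -HardMax is given.
function Get-WorkingSetFlag {
    param([switch]$HardMax)
    $minDisable = 0x2   # QUOTA_LIMITS_HARDWS_MIN_DISABLE
    $maxEnable  = 0x4   # QUOTA_LIMITS_HARDWS_MAX_ENABLE
    $maxDisable = 0x8   # QUOTA_LIMITS_HARDWS_MAX_DISABLE
    $max = if ($HardMax) { $maxEnable } else { $maxDisable }
    [uint32]($minDisable -bor $max)
}

# Hypothetical helper: apply working set limits to one process.
# Leaving both sizes at -1 asks Windows to remove as many pages as it can.
function Set-WorkingSetLimit {
    param(
        [Parameter(Mandatory)][System.Diagnostics.Process]$Process,
        [long]$MinBytes = -1,
        [long]$MaxBytes = -1,
        [switch]$HardMax
    )
    $flags = Get-WorkingSetFlag -HardMax:$HardMax
    $ok = [Sketch.Kernel32]::SetProcessWorkingSetSizeEx(
        $Process.Handle, [IntPtr]::new($MinBytes), [IntPtr]::new($MaxBytes), $flags)
    if (-not $ok) {
        $code = [System.Runtime.InteropServices.Marshal]::GetLastWin32Error()
        throw [System.ComponentModel.Win32Exception]::new($code)
    }
}

# The API only exists on Windows, so only declare it there.
if ($env:OS -eq 'Windows_NT') {
    Add-Type -Namespace Sketch -Name Kernel32 -MemberDefinition @'
[DllImport("kernel32.dll", SetLastError = true)]
public static extern bool SetProcessWorkingSetSizeEx(
    IntPtr hProcess, IntPtr dwMinimumWorkingSetSize,
    IntPtr dwMaximumWorkingSetSize, uint Flags);
'@

    # Example, commented out so that nothing is trimmed by accident:
    # Get-Process -Name PowerShell_ISE |
    #     ForEach-Object { Set-WorkingSetLimit -Process $_ -MinBytes 1MB -MaxBytes 160MB }
}
```

Adding -HardMax to the example call would turn the maximum into a hard cap, so try that on a test machine first.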

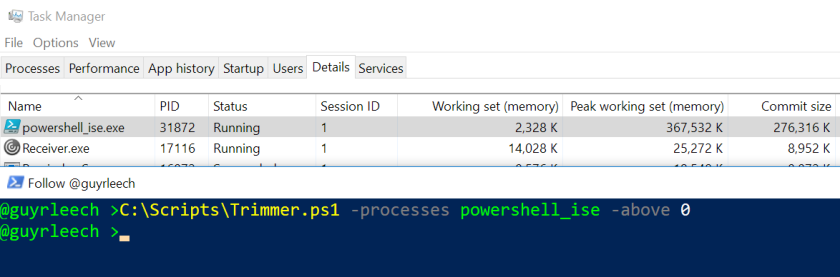

Here is an example of using soft working set limits for an instance of the PowerShell_ISE process. We start with the process consuming around 286MB of memory as I have been using it (in anger, as you do!):

If we just use a regular trim, aka scalp, on it then the working set reduces to almost nothing:

The -above parameter is actually superfluous here but I thought I’d demonstrate its use although zero is not a sensible value to use in my opinion.

However, having trimmed it, if I return to the PowerShell_ISE window and have a look at one of my scripts in it, the working set rapidly increases by fetching memory from the page file (or the standby list if it hasn’t yet been written to the page file – see this informative article for more information):

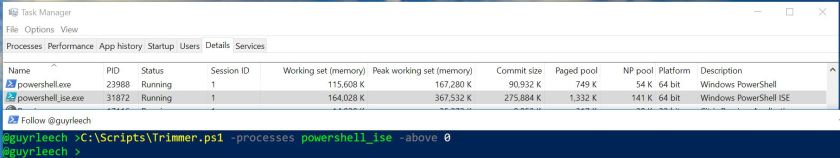

If I then actually run and debug a script the working set goes yet higher again. However, I then switch to Microsoft Edge, to write this blog post, so PowerShell_ISE is still open but not being used. I therefore reckon that a working set of about 160MB is ample for it and thus I can set that via the following where the OS trims the working set, by removing enough least recently used pages, to reach the working set figure passed to the SetProcessWorkingSetSizeEx API that the script calls:

However, because I have not also specified the -hardMax parameter, the limit is a soft one and can therefore be exceeded if required, but I have still saved around 120MB by trimming that one process’s working set.

Useful, but are you really going to watch to see what the “resting” working set is for every process? Well, I know that I wouldn’t, so use this last technique for your main apps/biggest consumers or just use one of the first three techniques. When I get some time, I may build this monitoring feature into the script so that it can trim even more intelligently but, since the script is on GitHub here, please feel free to have a go yourself.
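If you do fancy having a go, one possible starting point is to sample a process’s working set for a while and take a typical value. The sketch below uses the median of the samples as the “resting” figure, which is purely my assumption about what a sensible estimate might be; the function names, sample count and interval are all made up for illustration.

```powershell
# Hypothetical helper: median of a set of values.
function Get-MedianValue {
    param([Parameter(Mandatory)][long[]]$Value)
    $sorted = @($Value | Sort-Object)
    $mid = [int][math]::Floor($sorted.Count / 2)
    if ($sorted.Count % 2) {
        $sorted[$mid]
    }
    else {
        ($sorted[$mid - 1] + $sorted[$mid]) / 2
    }
}

# Hypothetical helper: sample one process's working set at a fixed interval
# and return the median, in bytes, as a rough "resting" figure.
function Measure-RestingWorkingSet {
    param(
        [Parameter(Mandatory)][int]$Id,
        [int]$Samples = 12,
        [int]$IntervalSeconds = 5
    )
    $readings = foreach ($i in 1..$Samples) {
        (Get-Process -Id $Id -ErrorAction Stop).WorkingSet64
        if ($i -lt $Samples) { Start-Sleep -Seconds $IntervalSeconds }
    }
    Get-MedianValue -Value $readings
}

# Example: watch the current PowerShell process for a couple of seconds.
$resting = Measure-RestingWorkingSet -Id $PID -Samples 3 -IntervalSeconds 1
'{0:N0}MB' -f ($resting / 1MB)
```

In practice you would want to sample over a much longer period, and only while the application is open but not in use, before feeding the figure in as a soft limit.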

Next time in this series I promise that I’ll show how the script can be used to stop leaky processes from consuming more memory than you believe they should.
